ZFS has a built-in “hot spares” feature that lets a sysadmin designate drives as spares for a storage pool, ready to be swapped in when a drive in the pool fails. If the appropriate pool property is set, a hot spare can even be swapped in automatically to replace the failed drive. Alternatively, the sysadmin can swap a spare in manually after detecting a failing drive that is reported as irreparable.

Hot spares can be designated in the ZFS storage pool in two separate ways:

- When the pool is created using the zpool create command, and

- After the pool is created using the zpool add command.

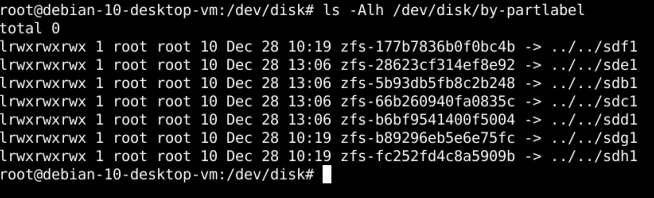

Before beginning to create the ZFS storage pool and identifying the spares, I want to list the available drives in my Debian 10 Linux VM in VirtualBox 6.0. I can accomplish this in the Terminal using the command:

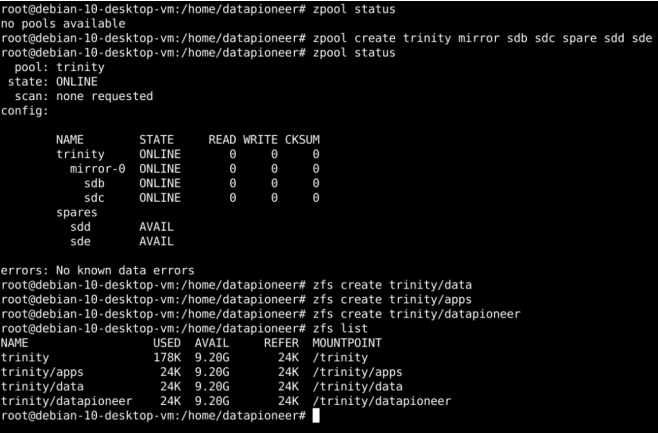
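The command itself survives only in the screenshot, so here is one way to get a similar listing. This is a sketch that assumes lsblk is a reasonable stand-in (the original may have used a different tool); the variable name DISK_COLUMNS is illustrative.

```shell
# Assumption: lsblk (part of util-linux) is installed, as on a stock Debian system.
DISK_COLUMNS=NAME,SIZE,TYPE

if command -v lsblk >/dev/null 2>&1; then
    # -d lists top-level devices only (partitions are skipped);
    # -o selects which columns to print.
    lsblk -d -o "$DISK_COLUMNS" || echo "lsblk could not read the block devices" >&2
else
    echo "lsblk not found" >&2
fi
```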

Here, we see that I have seven drives in addition to the primary drive, /dev/sda, used by Debian 10 Linux. These drives are listed above and range from /dev/sdb to /dev/sdh. I plan to create a ZFS storage pool called trinity with a mirror of two drives, sdb and sdc, and two hot spares, sdd and sde. The diagram below illustrates the new ZFS mirrored storage pool with the two spares identified. In addition, I have taken the liberty of recreating the trinity/data, …/apps, and …/datapioneer datasets:

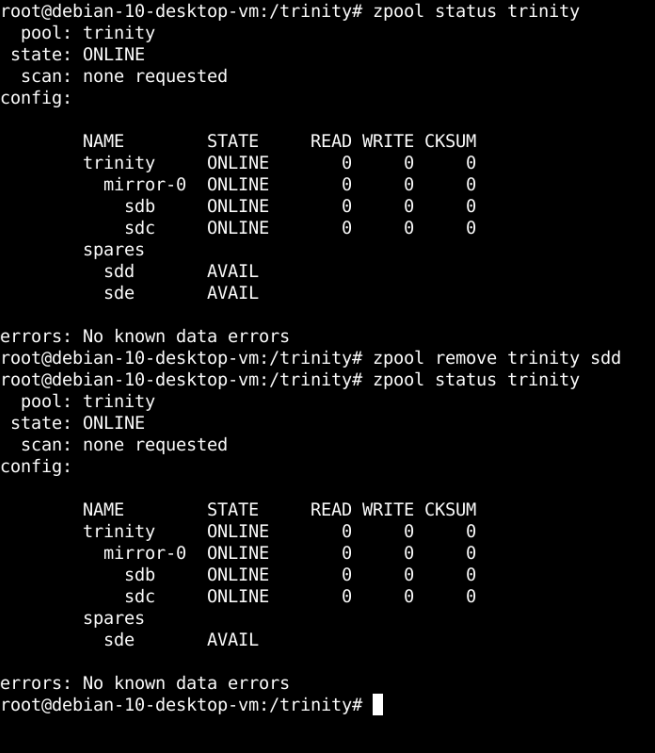

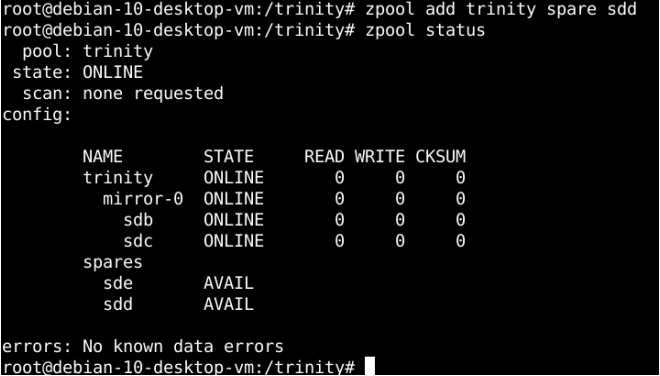
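For reference, the pool layout described above (a two-drive mirror plus two spares) could be created along these lines. This is a minimal sketch using the device names from this article; the variables POOL, MIRROR_DISKS, SPARE_DISKS and RUN are illustrative and not part of ZFS. Keep in mind that zpool create takes over the named disks, which is why the sketch defaults to a dry run.

```shell
# Names taken from this article; adjust them for your own system.
POOL=trinity
MIRROR_DISKS="sdb sdc"
SPARE_DISKS="sdd sde"
# Dry run by default: the commands are printed, not executed.
# Invoke with RUN= (empty) as root to actually run them.
RUN=${RUN-echo}

# The disk lists are left unquoted on purpose so each name becomes its own argument.
$RUN zpool create "$POOL" mirror $MIRROR_DISKS spare $SPARE_DISKS
$RUN zpool status "$POOL"
```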

As reported above by the zpool status (or zpool status trinity) command, the two mirror drives sdb and sdc are ONLINE, and the two spares sdd and sde are AVAIL. If I want to remove one of the hot spares and replace it with another drive, I can do this using the zpool remove command:
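The original output for this step is not shown, so here is a hedged sketch of the removal. It assumes the spare being removed is sdd, as the following paragraph indicates; POOL, SPARE and RUN are illustrative names.

```shell
POOL=trinity
SPARE=sdd
# Dry run by default: prints the commands. Invoke with RUN= (empty) as root to execute.
RUN=${RUN-echo}

# Remove the idle (AVAIL) spare from the pool, then check the spares list.
$RUN zpool remove "$POOL" "$SPARE"
$RUN zpool status "$POOL"
```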

Because the spare was AVAIL rather than INUSE, we were able to remove it. Had it been INUSE, the removal would not have been allowed; the spare would first have to be released from the device it is standing in for (for example, with zpool detach) so that it returns to AVAIL. One important thing to keep in mind is that each spare must have a capacity equal to or greater than that of the largest drive in the storage pool. Now let’s put a spare back into the storage pool by re-adding sdd, the drive we just removed. This is done by running the zpool add trinity spare command with sdd as the device to add:
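Spelled out in full, that step might look like the sketch below. The device and pool names come from this article; the variables and the RUN dry-run switch are illustrative.

```shell
POOL=trinity
NEW_SPARE=sdd
# Dry run by default: prints the commands. Invoke with RUN= (empty) as root to execute.
RUN=${RUN-echo}

# Add the drive to the pool's list of hot spares, then confirm it shows up as AVAIL.
$RUN zpool add "$POOL" spare "$NEW_SPARE"
$RUN zpool status "$POOL"
```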

As an aside, if at any time you wish to check the health of a ZFS storage pool, you can run either the zpool status trinity command or the zpool status -x trinity command. The latter reports only problems and prints a one-line summary when the pool is healthy, so you don’t have to look at the STATE field of the full status screen:

root@debian-10-desktop-vm:/trinity# zpool status trinity -x

pool 'trinity' is healthy

Now, if an ONLINE drive fails, the pool moves into a DEGRADED state. The failed drive can then be replaced manually by the sysadmin or, if the sysadmin has set the pool property autoreplace=on, replaced automatically by ZFS. The command to set this property in this example is:

root@debian-10-desktop-vm:/trinity# zpool set autoreplace=on trinity

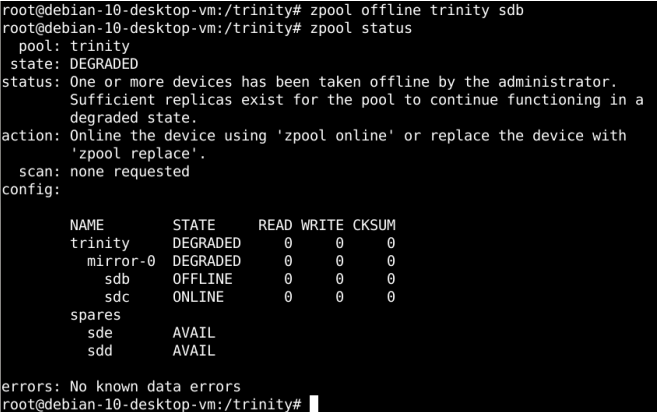
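To confirm that the property took effect, it can be read back with zpool get. A small sketch, with POOL and RUN as illustrative names:

```shell
POOL=trinity
# Dry run by default: prints the command. Invoke with RUN= (empty) to execute.
RUN=${RUN-echo}

# Show the current value and source of the autoreplace property for the pool.
$RUN zpool get autoreplace "$POOL"
```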

We can simulate the failure of one of our drives by manually taking it OFFLINE. So, let’s pretend that drive sdb fails by manually OFFLINE’ing it:
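In command form, the simulated failure could be sketched as follows. The names come from this article; POOL, FAILED and RUN are illustrative.

```shell
POOL=trinity
FAILED=sdb
# Dry run by default: prints the commands. Invoke with RUN= (empty) as root to execute.
RUN=${RUN-echo}

# Take the drive offline to simulate a failure, then inspect the pool.
$RUN zpool offline "$POOL" "$FAILED"
$RUN zpool status "$POOL"

# To undo the simulation instead of replacing the drive:
#   zpool online trinity sdb
```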

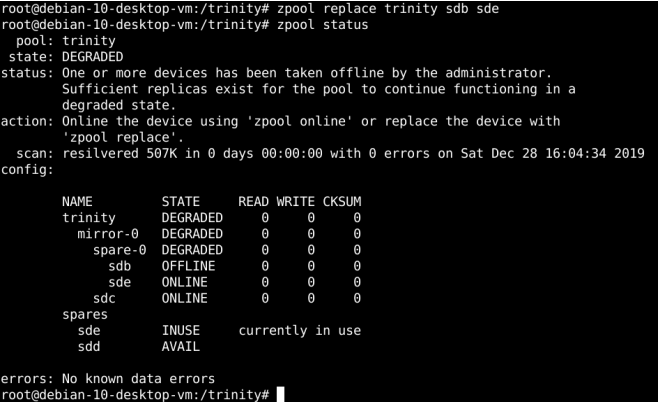

Note that sdb is now OFFLINE and that the trinity pool as a whole is in a DEGRADED state. Had this been a real-world drive failure rather than one simulated by taking the drive OFFLINE, the failed drive, sdb, would have been replaced by one of the AVAIL spares, either sde or sdd, reducing the number of AVAIL spares by one and restoring the mirror’s redundancy. I can either bring sdb back ONLINE, since I know it to be a good drive, or simulate replacing it with one of the spares through a manual rather than automatic process. I will demonstrate the latter using the zpool replace command:
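A sketch of the manual replacement, using the drives named in this article (sdb as the failed drive, sde as the spare); the variables and the RUN dry-run switch are illustrative.

```shell
POOL=trinity
FAILED=sdb
SPARE=sde
# Dry run by default: prints the commands. Invoke with RUN= (empty) as root to execute.
RUN=${RUN-echo}

# Replace the offline drive with the hot spare; ZFS then resilvers onto the spare.
$RUN zpool replace "$POOL" "$FAILED" "$SPARE"
$RUN zpool status "$POOL"
```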

Now, after running the zpool replace trinity sdb sde command to replace the failed sdb drive with the hot spare sde, the status shows that the spare sde is INUSE rather than AVAIL and that sde is ONLINE.

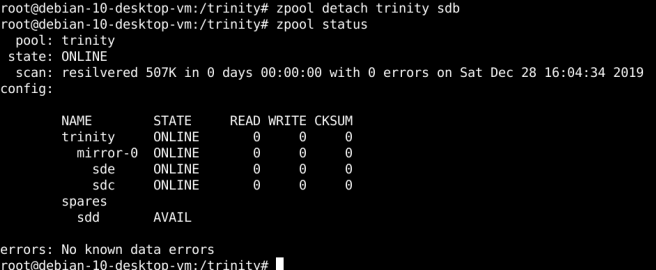

And, finally, if we detach the failed (OFFLINE) drive using the zpool detach trinity sdb command, the storage pool should return to an ONLINE, healthy status:
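As a runnable sketch of that last step (POOL, FAILED and RUN are illustrative names):

```shell
POOL=trinity
FAILED=sdb
# Dry run by default: prints the commands. Invoke with RUN= (empty) as root to execute.
RUN=${RUN-echo}

# Detach the offline drive from the mirror, then run the quick health check.
$RUN zpool detach "$POOL" "$FAILED"
$RUN zpool status -x "$POOL"
```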

Note that the AVAIL spares are now reduced to sdd only, and sde has replaced the failed sdb drive, which was formerly OFFLINE. The resilver copied 507K with 0 errors and completed on Sat Dec 28 2019 at 16:04:34 local time.

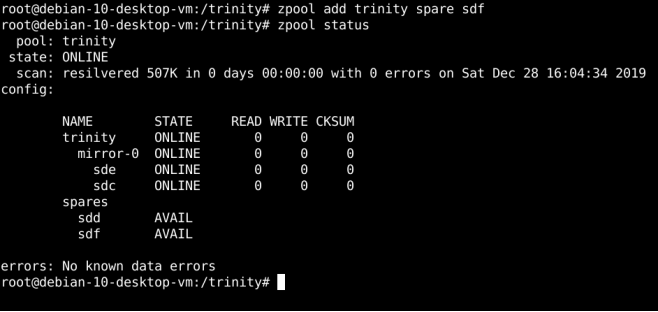

The last thing that we can do here before wrapping up this article is to add another drive to the “hot spares” list. We know from the previous command that we ran that we have drive sdf available for this purpose. So, let’s add this drive as a spare. Since the drive, sdf, is being added after the ZFS storage pool was created, we can use the zpool add trinity spare sdf command to add this drive as an AVAIL spare:

Now, we have two spares AVAIL instead of the single drive and our ZFS storage pool is healthy once again.
